I can add extra variation to each theme with CSS pseudo-selectors, which make it trivial to override or substitute rules. Each visualization has a handful of differently colored themes, and I do some rudimentary CSS namespacing by swapping a class on the html element. Since D3 just draws SVG, I was able to style everything in CSS: no images are used at all, not even for icons.
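Here is a minimal sketch of that class-based namespacing in TypeScript. The theme class names (`theme-0` and so on), the `ClassListLike` interface and `applyTheme` are hypothetical and do not come from the original project. In a browser you would pass `document.documentElement.classList`. The CSS in the comment shows how a pseudo-selector such as `:nth-child` can then vary the look within a single theme.

```typescript
// Minimal sketch of CSS namespacing via a class on <html>.
// Hypothetical names: THEMES, ClassListLike, applyTheme (not from the original project).
//
// Matching CSS might look like this (also hypothetical):
//   html.theme-0 svg .bar { fill: #e91e63; }
//   html.theme-1 svg .bar { fill: #00bcd4; }
//   html.theme-1 svg .bar:nth-child(odd) { fill: #ffeb3b; } /* pseudo-selector variation */

// Structural subset of DOMTokenList, so the sketch also runs outside a browser.
// In a page: applyTheme(document.documentElement.classList, i)
interface ClassListLike {
  add(...tokens: string[]): void;
  remove(...tokens: string[]): void;
}

const THEMES: string[] = ["theme-0", "theme-1", "theme-2"];

// Replace any existing theme class with the one at `index` (wrapping around,
// including negative indices), leaving unrelated classes untouched.
function applyTheme(classList: ClassListLike, index: number): string {
  const n = THEMES.length;
  const next = THEMES[((index % n) + n) % n];
  classList.remove(...THEMES);
  classList.add(next);
  return next;
}
```

Because every rule is scoped under an `html.theme-N` selector, switching themes is a single class swap and the SVG that D3 draws never has to change.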

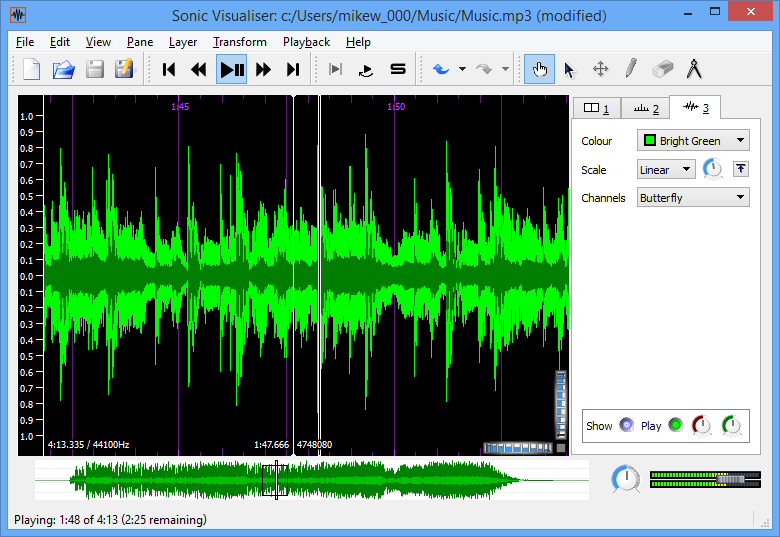

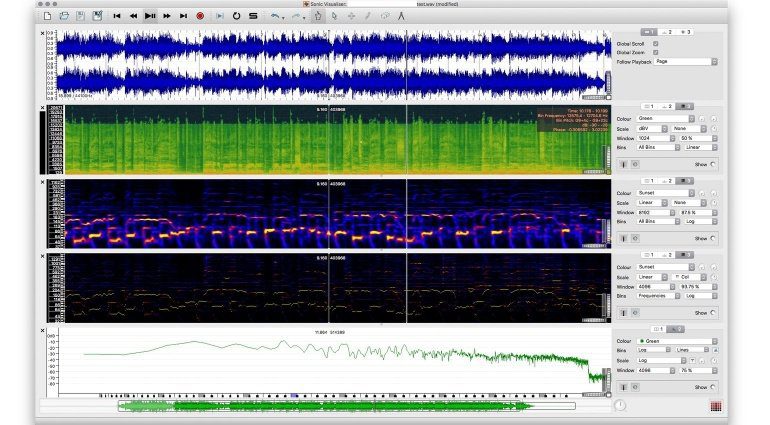

# SONIC VISUALISER SEPARATE WAVEFORMS TRIAL #

Using requestAnimationFrame (with a little frame limiting for performance), I update the waveform data array as the music changes. I then normalize the data a bit (or transform it slightly, depending on the visualization) and use d3.js to redraw the SVG from the updated array. Each visualization uses the data a bit differently; getting results I liked looking at was mostly trial and error.
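A sketch of that loop in TypeScript follows. It assumes the array comes from a Web Audio `AnalyserNode` via `getByteTimeDomainData`, which fills unsigned bytes where 128 means silence. The `AnalyserLike` interface, the helpers `normalize`, `makeFrameLimiter` and `startLoop`, and the 30 fps cap are hypothetical illustrations, not the project's actual code. `requestAnimationFrame` is passed in as a parameter so the logic can also run outside a browser.

```typescript
// Hypothetical structural subset of the Web Audio AnalyserNode.
interface AnalyserLike {
  readonly fftSize: number;
  getByteTimeDomainData(array: Uint8Array): void;
}

// Map unsigned bytes (0..255, 128 = silence) to floats in [-1, 1).
function normalize(bytes: Uint8Array): number[] {
  return Array.from(bytes, (b) => (b - 128) / 128);
}

// Wrap a draw function so it runs at most `maxFps` times per second.
// Returns true when the frame was drawn, false when it was skipped.
function makeFrameLimiter(maxFps: number, draw: (t: number) => void) {
  const minInterval = 1000 / maxFps;
  let last = -Infinity;
  return (now: number): boolean => {
    if (now - last < minInterval) return false; // too soon: skip this frame
    last = now;
    draw(now);
    return true;
  };
}

// Each frame: read the current waveform, normalize it, hand it to `render`
// (where a d3 data join would redraw the SVG; not shown here).
// Browser wiring, using real Web Audio calls:
//   const ctx = new AudioContext();
//   const source = ctx.createMediaElementSource(audioElement);
//   const analyser = ctx.createAnalyser();
//   source.connect(analyser);
//   analyser.connect(ctx.destination);
//   startLoop(analyser, redraw, (cb) => requestAnimationFrame(cb));
function startLoop(
  analyser: AnalyserLike,
  render: (data: number[]) => void,
  raf: (cb: (t: number) => void) => number,
  maxFps = 30, // hypothetical cap
): void {
  const buffer = new Uint8Array(analyser.fftSize);
  const tick = makeFrameLimiter(maxFps, () => {
    analyser.getByteTimeDomainData(buffer);
    render(normalize(buffer));
  });
  const loop = (t: number): void => {
    tick(t);
    raf(loop); // keep scheduling; the limiter decides whether to redraw
  };
  raf(loop);
}
```

Scheduling every frame while skipping redraws keeps the loop aligned with the display refresh. The limiter caps how often d3 actually touches the DOM, which is usually the expensive part.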

# SONIC VISUALISER SEPARATE WAVEFORMS HOW TO #

Party-mode is an experimental music visualizer built with d3.js and the Web Audio API. Using the Web Audio API, I can get an array of numbers that corresponds to the waveform of the sound an HTML5 audio element is producing. There's a good tutorial on how to do this.

Denoiser: Real Time Speech Enhancement in the Waveform Domain (Interspeech 2020). We provide a PyTorch implementation of the paper Real Time Speech Enhancement in the Waveform Domain, in which we present a causal speech enhancement model that works on the raw waveform and runs in real time on a laptop CPU. The proposed model is based on the Demucs architecture, originally proposed for music source separation: an encoder-decoder architecture with skip connections. It is optimized in both the time and frequency domains, using multiple loss functions. Empirical evidence shows that it can remove various kinds of background noise, both stationary and non-stationary, as well as room reverb.

Additionally, we suggest a set of data augmentation techniques, applied directly to the raw waveform, which further improve the model's performance and its ability to generalize.
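To make "optimized in both the time and frequency domains, using multiple loss functions" concrete, here is one common form of such an objective. It combines an L1 term on the waveform with a multi-resolution STFT term, which is the sum of a spectral-convergence part and a log-magnitude part. To my understanding this is the shape of the Denoiser objective, but treat it as an illustration and check the paper for the exact normalization and STFT settings. Here $y$ is the clean waveform of length $T$ and $\hat{y}$ is the model's estimate. Each of the $M$ STFT terms uses a different FFT size, hop length and window length.

$$
\mathcal{L}(y, \hat{y}) = \frac{1}{T}\Big[\lVert y - \hat{y}\rVert_1 + \sum_{i=1}^{M} \mathcal{L}^{(i)}_{\text{stft}}(y, \hat{y})\Big],
\qquad
\mathcal{L}_{\text{stft}} = \mathcal{L}_{\text{sc}} + \mathcal{L}_{\text{mag}}
$$

$$
\mathcal{L}_{\text{sc}}(y, \hat{y}) = \frac{\big\lVert\, |\mathrm{STFT}(y)| - |\mathrm{STFT}(\hat{y})| \,\big\rVert_F}{\big\lVert\, |\mathrm{STFT}(y)| \,\big\rVert_F},
\qquad
\mathcal{L}_{\text{mag}}(y, \hat{y}) = \frac{1}{T}\big\lVert \log|\mathrm{STFT}(y)| - \log|\mathrm{STFT}(\hat{y})| \big\rVert_1
$$

The waveform term penalizes sample-level errors. The STFT terms compare magnitude spectra at several time-frequency resolutions, so no single window size dominates what the model learns.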
